Réjean Plamondon kindly sent me his analysis software to play with, and I have three experiments' worth of data that I am currently analysing to assess whether it can help me find what I want. Here I'll briefly review the model, the experiments and some lessons I've learned training myself to write with my nondominant right hand.

The Lognormal Model of Handwriting

The lognormal model takes a digital recording of some handwriting, like the letter 'x' below, and tries to simulate the velocity profile of the recording using only lognormal curves.

Figure 1. A 130 Hz recording of someone writing the letter 'x' on a Wacom tablet

Figure 2. A lognormal curve

The lognormal model assumes that everything went fine, and therefore tries to model the velocity profiles of the strokes making up the letters using only lognormal curves. This only works, of course, if the letter is well formed, so the model's fit parameters give you a measure of how well formed the letter was.
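To make the idea of a lognormal velocity profile concrete, here is a minimal Python sketch. It is not Plamondon's software; it assumes the standard lognormal form for a single stroke's speed, and the parameter names (`D`, `t0`, `mu`, `sigma`) and the two example strokes are made up for illustration. It also adds strokes as plain speeds and ignores direction, which is a simplification.

```python
import math

def lognormal_speed(t, D, t0, mu, sigma):
    """Speed at time t of one stroke with a lognormal velocity profile.

    D is the area under the curve, t0 the onset time, and mu and sigma
    set the position and width of the curve on a log-time axis.
    All four names are assumptions made for this sketch.
    """
    if t <= t0:
        return 0.0  # the stroke has not started yet
    x = t - t0
    return (D / (sigma * math.sqrt(2 * math.pi) * x)
            * math.exp(-((math.log(x) - mu) ** 2) / (2 * sigma ** 2)))

def summed_speed(t, strokes):
    """Scalar sum of several lognormal strokes (direction is ignored here)."""
    return sum(lognormal_speed(t, *params) for params in strokes)

# Two hypothetical overlapping strokes: (D, t0, mu, sigma)
strokes = [(1.0, 0.00, -1.5, 0.3), (0.6, 0.15, -1.6, 0.25)]
dt = 1 / 130  # sample spacing for a 130 Hz recording
profile = [summed_speed(i * dt, strokes) for i in range(130)]
```

Each stroke contributes one asymmetric bump to `profile`, and a well-formed letter should need only a few of them.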

There are three numbers: nbLog (the number of lognormal curves it took to reach a criterion fit), SNR (the signal-to-noise ratio, a measure of the fit of the resulting simulation to the data) and SNR/nbLog, a ratio that penalises the SNR as a function of the number of lognormal functions required to achieve it (you can produce a perfect fit if you allow yourself an infinite number of lognormal elements, which is obviously not that useful). The only DV I think is interesting is the third one, SNR/nbLog.
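As a rough illustration of how these numbers relate, here is a small Python sketch. It assumes the conventional decibel definition of SNR (signal power over squared reconstruction error); I have not checked that this is exactly what the software computes, and the function names and toy velocity values are hypothetical.

```python
import math

def snr_db(observed, reconstructed):
    """SNR in dB between a recorded and a simulated velocity profile.

    Assumes the usual definition: 10 * log10(signal power / error power).
    """
    signal = sum(v * v for v in observed)
    noise = sum((v - r) ** 2 for v, r in zip(observed, reconstructed))
    if noise == 0:
        return math.inf  # a perfect reconstruction
    return 10 * math.log10(signal / noise)

def snr_per_lognormal(snr, nb_log):
    """Penalise the fit quality by the number of lognormals it took."""
    return snr / nb_log

# Toy velocity samples, made up for illustration only
observed = [1.0, 2.0, 3.0, 4.0]
reconstructed = [1.1, 1.9, 3.1, 3.9]
fit = snr_db(observed, reconstructed)

# The same SNR is worth less if it took more curves to reach it
few_curves = snr_per_lognormal(25.0, 5)    # 5.0
many_curves = snr_per_lognormal(25.0, 10)  # 2.5
```

The last two lines show the point of the ratio: two fits with the same SNR are not equally good if one needed twice as many lognormals.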

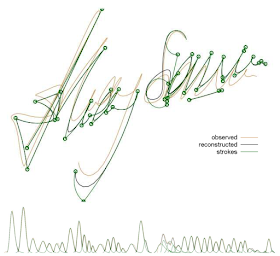

The end result looks something like Figure 3, and the various DVs can be statistically analysed in straightforward ways.

Figure 3. Reconstructed trace and velocity profile of an on-line signature. From Fisher & Plamondon, 2015

My interest is in whether this analysis provides numbers that could be computed by software and presented in some format to a clinician or teacher working with someone on their handwriting. I want to know a) how reliable the measures are within a person, b) how variable they are across people, and c) whether they are sensitive enough to detect changes after a period of training. Data analysis takes ages and I'm having to redo some with an updated version of the software, so I don't have numbers yet; I'll add a post when I do.

Experiment 1 simply asked participants to write all the letters of the alphabet ten times, one at a time in a random order. I will be assessing the within- and between-participant variability, to get a sense of the basic noise I am up against in other analyses.
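One simple way to summarise that variability is sketched below in Python. This is an assumption about how the summary could be done, not the analysis I have actually run: within-participant variability is taken as each person's standard deviation across repetitions, and between-participant variability as the standard deviation of the participant means. The participant labels and scores are invented.

```python
from statistics import mean, stdev

def variability(scores_by_participant):
    """Summarise within- and between-participant variability.

    scores_by_participant maps a participant label to a list of scores
    (for example SNR/nbLog for each repetition of a letter).
    """
    means = {p: mean(s) for p, s in scores_by_participant.items()}
    within = {p: stdev(s) for p, s in scores_by_participant.items()}
    between = stdev(list(means.values()))
    return means, within, between

# Hypothetical SNR/nbLog scores, three repetitions per participant
scores = {
    "p1": [2.0, 2.2, 1.8],
    "p2": [3.0, 3.4, 2.6],
}
means, within, between = variability(scores)
```

If `between` is not comfortably larger than the typical `within` value, the measure will struggle to tell people apart, let alone detect training effects.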

Experiment 2 (ably run by an undergraduate, Faith Marsh, doing her final-year project with me) was a training study. Faith took baseline recordings of people writing letters and the pangram "The quick brown fox jumped over the lazy dog" with both their dominant and nondominant hands. She then had people take packs home and practice writing every day with their nondominant hand (to avoid a ceiling effect; she made ~250-word texts with weird trivia in an effort to keep people entertained) and then repeated her measures post-training. Faith invented the training programme herself because there is a real lack of systematic handwriting training out there.

Experiment 3 (ably run by my then MSc student and now PhD student Danny Leach) took the idea that letters are composed of strokes seriously and tested it. He recorded people at baseline and post training on all the letters of the alphabet and all the strokes that are required to make those letters, using their nondominant hand. He then trained half of them on writing letters and half of them on strokes (all digitally recorded this time) and looked for learning and transfer (on the assumption that if letters are made of strokes, training on strokes should transfer and vice versa).
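The logic of that design can be sketched in a few lines of Python. This is only an illustration of how learning and transfer could be separated, with a made-up record layout and made-up scores: a gain on the item type a group trained on counts as learning, and a gain on the other item type counts as transfer.

```python
from statistics import mean

def learning_and_transfer(records):
    """Average post-minus-pre gain per (training group, effect type).

    Each record is a dict with keys 'group' and 'item' (both either
    'letters' or 'strokes') plus 'pre' and 'post' scores. This layout
    is hypothetical.
    """
    gains = {}
    for r in records:
        kind = "learning" if r["group"] == r["item"] else "transfer"
        gains.setdefault((r["group"], kind), []).append(r["post"] - r["pre"])
    return {key: mean(values) for key, values in gains.items()}

# Invented scores, one record per group and item type
records = [
    {"group": "letters", "item": "letters", "pre": 2.0, "post": 3.0},
    {"group": "letters", "item": "strokes", "pre": 2.0, "post": 2.5},
    {"group": "strokes", "item": "strokes", "pre": 1.5, "post": 2.5},
    {"group": "strokes", "item": "letters", "pre": 2.0, "post": 2.25},
]
summary = learning_and_transfer(records)
```

If letters really are made of strokes, the transfer entries in `summary` should be reliably above zero for both groups.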

All the analysis was confounded by an occasional bug in my analysis code (my Matlab code wasn't reliably recomputing SNR, and we didn't notice because it only failed some of the time) so I am reanalysing everything with a version of Plamondon's code that outputs SNR as well as nbLog. This has taken months, but I am nearly done!

Some thoughts on how well this is going to go

Because I was bored one day and am a huge nerd, I decided to practice writing with my nondominant right hand. I was going to just chug through Faith's training pack, but we don't need the data, I wanted to do this for a while, and I wanted to read something I wouldn't otherwise, so I am currently copying out the King James Bible, beginning at Genesis.

Some things I have learned, in no particular order

Figure 4. I will write the whole thing, I swear to...well, God I guess

- There are multiple learning curves. At first, I was writing with my whole hand fairly rigid and doing all the moving. I started improving, but at some point I switched and began using my fingers to move the pen without also moving the main part of my hand. This is reminiscent of the idea of freezing our degrees of freedom early in learning, and unfreezing them later on to gain flexibility and increased control. So the net result on the page being analysed by the model can actually be the product of two qualitatively different writing modes, and it would be interesting to track that transition then see if the model noticed.

- There is a very real speed-accuracy tradeoff. Because this is writing, and because some speed is a virtue, I find myself sometimes working on developing a fluid, speedy motion across the page. Other times I slow down and focus on legibility (Faith looked at this with her pangrams, at least a little). Again, it would be interesting to track that independently and see if the model notices; I'd bet the faster motions might look better behaved to the model, even if their actual legibility was suffering.

- Writing the letter 'a' by itself is not the same thing as writing it in the word 'ark' or 'rainbow'. I've toyed with some ideas about how to easily take some recorded words and cut the recordings up into letters, and compare those to letters produced in isolation. My ideas mostly entail humans chugging through mounds of handwriting data in Matlab graphs, though; thoughts welcome.

- The letter 'g' can go fuck itself.

- It's not quite clear at what point I can consider my right handed writing to have topped out. My handwriting with my left hand is fluid and generally legible but not super neat; maybe I'll peak at 'not very good handwriting' with my right hand too (see the images).

- Watching the letters being formed helps immensely with legibility. When I read what I am writing, it is all generally clear and the writing looks ok. When I go back a few moments later and look at the static outcome, the legibility can go down and the whole thing looks a lot scrappier. Writing I was quite happy with one night looks awful the next. This reminds me of a story I heard at IU about the development of the Apple Newton and Newton II PDAs. Both had handwriting recognition software, but the first one was terrible at it. The trick that made the II work so well was to make the recognition dynamic; it didn't evaluate the final static form of the letter, it evaluated the way the letter unfolded over time. This makes intuitive sense; there are many things that count as a legible 'A' but there's only one letter in the alphabet that's drawn in that sequence.

- The Bible is weird. My favourite bit is the Cursing of Canaan. Basically, Noah gets tanked on wine and falls asleep naked. His son Ham sees him this way and his other sons cover him up without looking. Noah wakes up, is pissed off and then for some reason curses Ham's son Canaan, who wasn't even there. The obvious question is why (and the answers are many); the other question is, why is this story in the Bible?? (no answers to that one yet).

My ideas for future work mostly involve taking other measures of what the hand is up to, and looking to see if the model notices when that changes. I'm still optimistic that I'll get numbers that will track some useful things about the handwriting.

That said, handwriting is clearly a case of blissful motor abundance and this analysis is going to struggle, because it is only comparing the (highly varied but still all perfectly legible and therefore successful) output to a single idea of 'good' handwriting. The real step up will be to throw my new favourite toy, Uncontrolled Manifold Analysis, at these data, but I won't know how to do that until I've learned how to UCM my new throwing data. Still, I've learned a lot from just interacting with the data and the model and with the act of writing with my nondominant hand itself, so it's been an interesting journey into a new task.
